Augmenting First-Person Swarm Teleoperation with Multisensory Feedback

A haptic jacket and spatial audio system for teleoperating aerial drone swarms, translating swarm state into intuitive tactile and auditory feedback | HRI 2026

Project Overview

This research explores how multisensory feedback—combining haptic and spatial audio cues—can improve human teleoperation of aerial drone swarms. While top-down views naturally convey swarm state information, they're impractical for real-world deployment and create single points of failure. First-person views preserve the distributed nature of swarms but severely limit operator awareness of swarm dynamics. We developed a wearable haptic system and spatial audio interface to bridge this gap, translating complex swarm-state information into intuitive tactile and auditory patterns.

The Challenge

Effective swarm teleoperation requires operators to maintain situational awareness of multiple distributed agents simultaneously. This is a fundamental mismatch with human perception, which relies on a single perspective. When controlling swarms from a first-person viewpoint, operators lose sight of drones outside their immediate camera feed. That makes it difficult to detect fragmentation, anticipate collisions, or judge overall swarm cohesion.

Technical Approach

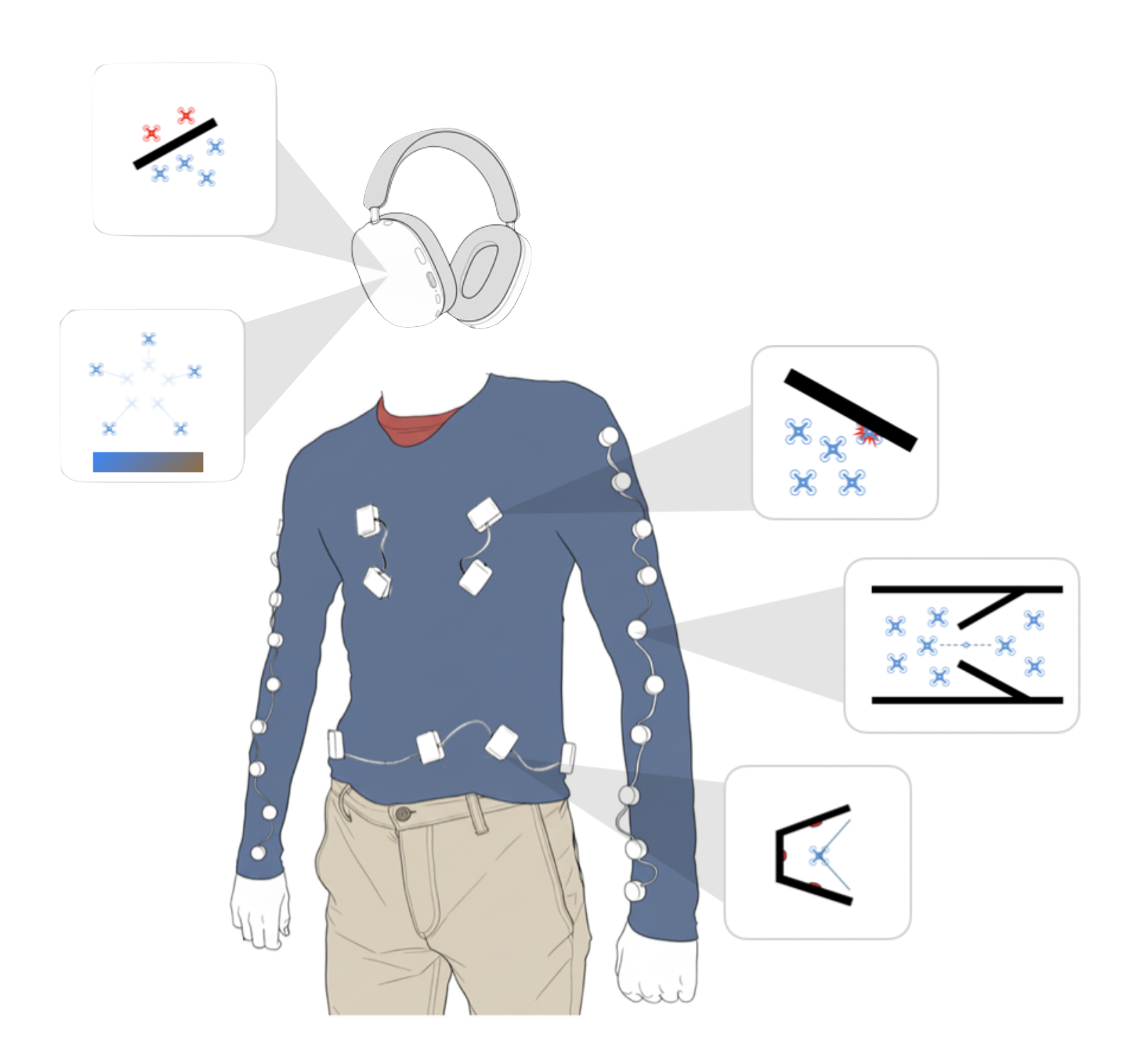

We built our system around the Olfati-Saber flocking algorithm and extracted swarm-state metrics from it: connectivity (cohesion and risk of splitting), inter-agent distance, drone isolation, predicted trajectories, and obstacle forces. These metrics were mapped to a custom haptic jacket with 32 vibrotactile actuators distributed across the torso, waist, and arms (a mix of linear resonant actuators, LRAs, and voice coil actuators, VCAs), complemented by spatial audio.
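The page does not say how these metrics were computed. The following is a minimal sketch that assumes drone positions in meters and reuses the proximity-graph construction from Olfati-Saber's flocking formulation, which combines the σ-norm with a smooth bump function. Under that construction, connectivity is approximated by the connected components of the proximity graph, and a drone is isolated when it has no neighbor within the interaction range. The parameter values (`EPS`, `H`, `R`) and all function names are illustrative assumptions, not the authors' settings.

```python
import math
from itertools import combinations

# Illustrative parameters (assumptions, not the authors' values).
EPS = 0.1  # epsilon in the sigma-norm
H = 0.2    # plateau width of the bump function for agent-agent adjacency
R = 8.4    # interaction range r in meters (e.g. 1.2 x a 7 m desired spacing)


def sigma_norm(z):
    """Olfati-Saber sigma-norm: (sqrt(1 + eps*||z||^2) - 1) / eps."""
    return (math.sqrt(1.0 + EPS * sum(c * c for c in z)) - 1.0) / EPS


def bump(z, h=H):
    """Smooth bump rho_h: 1 on [0, h), cosine fall-off on [h, 1], 0 elsewhere."""
    if 0.0 <= z < h:
        return 1.0
    if h <= z <= 1.0:
        return 0.5 * (1.0 + math.cos(math.pi * (z - h) / (1.0 - h)))
    return 0.0


def adjacency(positions, r=R):
    """Weights a_ij = rho_h(||q_j - q_i||_sigma / r_alpha), with r_alpha = ||r||_sigma."""
    r_alpha = sigma_norm([r])
    n = len(positions)
    a = [[0.0] * n for _ in range(n)]
    for i, j in combinations(range(n), 2):
        diff = [qj - qi for qi, qj in zip(positions[i], positions[j])]
        a[i][j] = a[j][i] = bump(sigma_norm(diff) / r_alpha)
    return a


def swarm_metrics(positions, r=R):
    """Hypothetical summary: components, isolated drones, mean nearest-neighbor distance."""
    a = adjacency(positions, r)
    n = len(positions)
    # Connected components of the proximity graph (edge wherever a_ij > 0), found by DFS.
    seen, components = set(), []
    for start in range(n):
        if start in seen:
            continue
        seen.add(start)
        stack, comp = [start], []
        while stack:
            i = stack.pop()
            comp.append(i)
            for j in range(n):
                if a[i][j] > 0.0 and j not in seen:
                    seen.add(j)
                    stack.append(j)
        components.append(sorted(comp))
    # A drone is isolated when it has no neighbor inside the interaction range.
    isolated = [i for i in range(n) if not any(a[i][j] > 0.0 for j in range(n))]
    # Mean nearest-neighbor distance as a simple inter-agent spacing metric.
    nearest = [min(math.dist(positions[i], positions[j]) for j in range(n) if j != i)
               for i in range(n)]
    return {"components": components, "isolated": isolated,
            "mean_nn_distance": sum(nearest) / n}


drones = [(0, 0, 10), (5, 0, 10), (0, 5, 10), (40, 40, 10)]
m = swarm_metrics(drones)
print(m["components"])  # [[0, 1, 2], [3]]
print(m["isolated"])    # [3]
```

More than one component signals that the swarm has already split. A softer "risk of splitting" signal could instead track the algebraic connectivity (second-smallest Laplacian eigenvalue) of the weighted graph `a`, which this sketch omits.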

Haptic and Audio Mapping Strategy

The mapping design exploits distinct body regions to communicate different types of information:

- Arms (20 LRAs): Convey disconnection through vibrations that propagate from shoulder to hand, mimicking the sensation of something separating from the swarm.

- Torso (4 VCAs): Signal drone crashes with strong vibrations, providing immediate collision feedback.

- Waist (8 VCAs): Communicate obstacle forces directionally, allowing operators to "feel" environmental constraints.

- Spatial Audio: Isolated drones emit sound from their location in 3D space. Changes in swarm spread are conveyed by shifting noise frequencies. Collisions trigger distinct alerts.
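The page describes these mappings only qualitatively. This sketch shows one way the three spatial mappings could be computed, under the following assumptions:

- The eight waist VCAs are evenly spaced.
- The 20 arm LRAs are split ten per arm and ordered from shoulder to hand.
- Obstacle forces arrive as 2D vectors in the operator's body frame.

All function names, the 50 ms sweep step, and the frame conventions are hypothetical. The page also does not specify whether the waist cue should point along the repulsive force or toward the obstacle.

```python
import math

NUM_WAIST = 8        # waist VCAs, assumed evenly spaced every 45 degrees
LRAS_PER_ARM = 10    # assumption: 20 arm LRAs split 10 per arm, index 0 at the shoulder


def waist_actuator(force_xy, max_force=1.0):
    """Choose the waist VCA aligned with a 2D obstacle force and scale intensity to [0, 1].

    Assumed body frame: x forward, y left. Actuator 0 faces forward and
    indices increase counter-clockwise (viewed from above).
    """
    fx, fy = force_xy
    magnitude = math.hypot(fx, fy)
    if magnitude == 0.0:
        return None, 0.0  # no obstacle force, nothing to render
    angle = math.atan2(fy, fx) % (2 * math.pi)
    index = round(angle / (2 * math.pi / NUM_WAIST)) % NUM_WAIST
    return index, min(magnitude / max_force, 1.0)


def arm_sweep(side, step_s=0.05):
    """Onset schedule for a shoulder-to-hand sweep: (actuator_id, onset_seconds) pairs."""
    offset = 0 if side == "left" else LRAS_PER_ARM
    return [(offset + k, round(k * step_s, 3)) for k in range(LRAS_PER_ARM)]


def audio_azimuth_deg(operator_xy, heading_rad, drone_xy):
    """Azimuth of a drone relative to the operator's heading, wrapped to [-180, 180] degrees."""
    dx = drone_xy[0] - operator_xy[0]
    dy = drone_xy[1] - operator_xy[1]
    rel = math.atan2(dy, dx) - heading_rad
    return math.degrees(math.atan2(math.sin(rel), math.cos(rel)))


print(waist_actuator((0.0, 0.5)))                # (2, 0.5): force to the left, half intensity
print(arm_sweep("right")[:2])                    # [(10, 0.0), (11, 0.05)]
print(audio_azimuth_deg((0, 0), 0.0, (0, 10)))   # drone directly to the operator's left
```

Keeping each function tied to one body region mirrors the page's design principle: each channel carries one kind of information, so the cues stay easy to tell apart.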

Experimental Validation

We conducted a study with 40 participants navigating a simulated 20-drone swarm through a complex obstacle course. Participants experienced two viewpoints (top-down and first-person) and two feedback conditions (visual-only versus multisensory), completing both basic navigation and object-collection tasks.
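The page does not state whether the 2 × 2 design was within-subjects or how condition order was assigned. If each participant completed all four viewpoint × feedback combinations, a balanced Latin square (Williams design) is a common way to control order effects. This sketch builds one for the four conditions and cycles participants through its rows, so 40 participants would give 10 per order. It is an illustrative assumption, not the authors' documented procedure, and the helper names are hypothetical.

```python
from itertools import product

VIEWPOINTS = ("top-down", "first-person")
FEEDBACK = ("visual-only", "multisensory")
CONDITIONS = list(product(VIEWPOINTS, FEEDBACK))  # 2 x 2 = 4 conditions


def balanced_latin_square(n):
    """Williams design for even n: every condition directly precedes every other exactly once."""
    if n % 2:
        raise ValueError("this simple construction needs an even number of conditions")
    # First row follows the pattern 0, 1, n-1, 2, n-2, ...
    first = [0] + [(j + 1) // 2 if j % 2 else n - j // 2 for j in range(1, n)]
    # Each later row shifts every entry by the row index (mod n).
    return [[(c + i) % n for c in first] for i in range(n)]


def order_for(participant, square):
    """Hypothetical assignment: cycle participants through the rows of the square."""
    row = square[participant % len(square)]
    return [CONDITIONS[c] for c in row]


square = balanced_latin_square(len(CONDITIONS))
for row in square:
    print(row)
# [0, 1, 3, 2]
# [1, 2, 0, 3]
# [2, 3, 1, 0]
# [3, 0, 2, 1]
```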

Key Findings

Top-down views achieved superior performance overall, but multisensory feedback significantly enhanced first-person teleoperation, particularly in cognitively demanding tasks:

- Crashes reduced by 49% in first-person object-collection tasks (from 2.58 to 1.32 per flight)

- Isolation time decreased by 54% (from 10.08s to 4.67s)

- Completion time improved by 14% (from 177.5s to 152.6s)

- Mental workload reduced by 26% according to NASA-TLX assessments
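Each percentage above is the relative reduction from the visual-only to the multisensory condition, (before − after) / before. This short check recomputes the three reductions for which the page gives raw values. NASA-TLX is omitted because its raw scores are not listed here.

```python
def percent_reduction(before, after):
    """Relative reduction from `before` to `after`, in percent (lower-is-better metrics)."""
    return 100.0 * (before - after) / before


reported = {
    "crashes per flight": (2.58, 1.32),
    "isolation time (s)": (10.08, 4.67),
    "completion time (s)": (177.5, 152.6),
}
for name, (before, after) in reported.items():
    print(f"{name}: {percent_reduction(before, after):.1f}% reduction")
# crashes per flight: 48.8% reduction
# isolation time (s): 53.7% reduction
# completion time (s): 14.0% reduction
```

Rounded to whole numbers, these match the 49%, 54%, and 14% reported above.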

The effect was most pronounced when visual resources were saturated, precisely when operators needed additional sensory channels most. Top-down perspectives showed minimal gains from multisensory feedback, likely because they already provided comprehensive spatial awareness.

Design Implications

This work demonstrates that sensory offloading through haptic and audio channels can effectively reduce cognitive burden when visual attention is saturated. Distributing different types of information across distinct body regions enables rapid discrimination without extensive training. While top-down views remain superior, multisensory feedback narrows the performance gap substantially, making first-person control viable for scenarios where top-down views aren't feasible—such as search and rescue, infrastructure inspection, or GPS-denied environments.

For more information, please see the PDF.