SoundSpindle: EEG Sonification for Sleep Staging

A system that sonifies EEG, rendering brainwaves as sound, to improve sleep staging performance among novice practitioners | PLoS 2025

Project Overview

Sleep staging—classifying brain activity during sleep into distinct physiological states—is a labor-intensive medical procedure performed over a million times annually in the United States. This research investigated whether adding an auditory representation (sonification) of EEG signals to standard visual displays could improve sleep staging accuracy or reduce cognitive workload. Through an online study with 40 participants, we found that EEG sonification significantly improved staging accuracy for novice practitioners, suggesting it may accelerate learning in inexperienced sleep stagers.

The Challenge

Manual sleep staging requires examining 30-second epochs of EEG, EOG, and EMG data to classify each segment as Wake, REM sleep, or non-REM stages 1-3. This demands specialized training, and even highly trained stagers achieve only about 83% inter-rater agreement. While machine learning shows promise, regulatory agencies like the FDA emphasize human-in-the-loop verification for medical AI. Manual sleep staging will therefore likely remain essential, which makes technologies that improve human performance critically important.

Approach

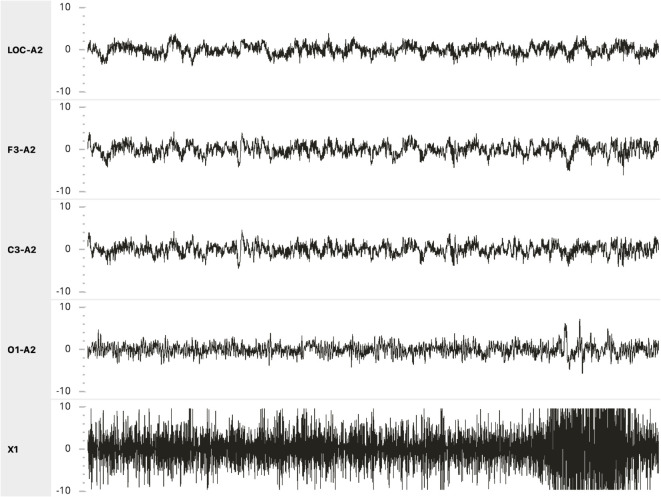

We used a minimal transformation to preserve all EEG information while making it audible. The O1 EEG channel was converted to sound by downsampling to 100 Hz and then playing it back roughly 22 times faster (a ~95% reduction in duration), compressing each 30-second visual epoch into a 1.36-second audio clip (20-1000 Hz). This simple approach, which avoids complex musical mappings, allowed participants' auditory systems to naturally identify relevant acoustic properties. The resulting sounds were qualitatively distinct for each sleep stage.
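The time compression described above can be illustrated with a minimal sketch. It is not the study's actual pipeline: the function names, the peak normalization, the 16-bit WAV output, the rounding of the playback rate, and the synthetic 10 Hz test signal are all assumptions made for illustration. The only values taken from the text are the 100 Hz EEG rate, the 30-second epoch, and the 1.36-second clip length.

```python
import io
import math
import struct
import wave

EPOCH_SECONDS = 30    # epoch length, from the text
EEG_RATE_HZ = 100     # downsampled EEG rate, from the text
CLIP_SECONDS = 1.36   # target audio clip length, from the text


def playback_rate(n_samples, clip_seconds=CLIP_SECONDS):
    """Sample rate (Hz) at which n_samples last about clip_seconds."""
    return round(n_samples / clip_seconds)


def sonify_epoch(samples, clip_seconds=CLIP_SECONDS):
    """Return (wav_bytes, rate): the raw samples written as 16-bit mono
    audio at a higher sample rate, with no other mapping applied."""
    # Peak normalization is an assumption, used only to fill the 16-bit range.
    peak = max(abs(s) for s in samples) or 1.0
    pcm = [int(32767 * s / peak) for s in samples]
    rate = playback_rate(len(samples), clip_seconds)
    buf = io.BytesIO()
    with wave.open(buf, "wb") as w:
        w.setnchannels(1)
        w.setsampwidth(2)
        w.setframerate(rate)
        w.writeframes(struct.pack("<%dh" % len(pcm), *pcm))
    return buf.getvalue(), rate


# Hypothetical input: a pure 10 Hz sine standing in for one EEG epoch.
n = EPOCH_SECONDS * EEG_RATE_HZ
epoch = [math.sin(2 * math.pi * 10 * t / EEG_RATE_HZ) for t in range(n)]
wav_bytes, rate = sonify_epoch(epoch)
speedup = rate / EEG_RATE_HZ
print(rate, round(speedup, 2), round(n / rate, 2))
```

Under these assumptions the playback rate comes out near 2206 Hz, a speed-up of about 22x, so a 10 Hz component in the EEG would be heard near 220 Hz. By the same arithmetic, the 20-1000 Hz audio band would correspond to roughly 0.9-45 Hz in the original signal.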

We conducted a within-subjects study where 40 participants completed sleep staging with and without sonified EEG. Participants ranged from novices (1-10 polysomnograms staged in the past year) to experts (80+). The interface displayed standard AASM-format sleep data while playing sonified EEG for the O1 channel. After a practice block with feedback, participants completed two counterbalanced test blocks of 30 epochs each.

Key Findings

Sonification did not improve accuracy, speed, or workload for the entire group. However, when stratified by experience, sonification significantly improved accuracy for the least experienced participants (those who had staged 1-10 polysomnograms). For this subgroup, Cohen's kappa agreement with expert scorers was significantly higher with sound (p = 0.01). No other experience group showed significant effects.
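For readers unfamiliar with the agreement metric, Cohen's kappa corrects raw agreement for the agreement expected by chance: kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed proportion of matching labels and p_e is the chance agreement implied by each rater's label frequencies. The sketch below is a generic implementation of that standard formula, not the study's analysis code; the function name and the ten example labels are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two equal-length sequences of categorical labels."""
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("label sequences must be non-empty and equal in length")
    n = len(rater_a)
    # Observed agreement: fraction of epochs given the same label.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: sum over labels of the product of marginal frequencies.
    count_a = Counter(rater_a)
    count_b = Counter(rater_b)
    expected = sum(count_a[label] * count_b[label] for label in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical labels for ten epochs (W = Wake, R = REM, N1-N3 = non-REM).
participant = ["W", "W", "N1", "N2", "N2", "N3", "R", "R", "N2", "W"]
expert = ["W", "N1", "N1", "N2", "N2", "N3", "R", "N2", "N2", "W"]
kappa = cohens_kappa(participant, expert)
print(round(kappa, 2))
```

In this made-up example the two label sets match on 8 of 10 epochs (p_o = 0.8) and chance agreement is p_e = 0.23, giving a kappa of about 0.74.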

Despite the lack of a statistically significant effect across the whole group, 30 of 40 participants reported finding sonification useful, and 39 indicated they would use a platform incorporating it.

Interpretation

The selective benefit for novices likely reflects two effects among more experienced stagers: a ceiling effect, in which experts already extract all available information visually, and a blocking effect, in which well-learned visual-stage associations prevent learning new sound-stage relationships. This contrasts with prior sonification research using novel tasks, and highlights the challenge of augmenting tasks where visual expertise already exists.

These findings suggest EEG sonification may be particularly valuable as a training tool for novice sleep stagers, potentially enabling faster progression to acceptable performance levels. The approach may also benefit non-specialists with limited sleep experience who need basic competency without extensive training.

For more information, please read the paper or access the data.