HapticHearing: A Haptic Feedback System for Complementing Speech Perception

A wearable multi-actuator haptic system that complements residual hearing with personalized tactile feedback for speech perception | ASSETS 2025

Project Overview

HapticHearing explores haptic feedback as a complementary channel for speech perception, particularly in scenarios where auditory information alone may be insufficient. The system translates phonemic information into spatiotemporal vibrotactile patterns, providing an additional sensory modality for understanding spoken language. This approach targets applications beyond traditional hearing aids and auditory amplification, investigating how touch can augment rather than replace auditory processing.

System Architecture

The HapticHearing pipeline processes speech in real time through three main stages:

- Energy-based Feature Extraction: Audio input is analyzed to extract relevant acoustic features, with emphasis on speech-specific characteristics like formant structure and temporal envelope information.

- Vowel Embedding: Phonemic content is mapped into a lower-dimensional space, identifying key distinctions between speech sounds that can be effectively communicated through touch.

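- Haptics Customization Stage: The phonemic representation is translated into spatiotemporal vibrotactile patterns using an "audiogram + QuickSIN" approach. This draws on a listener's pure-tone hearing thresholds (the audiogram) and speech-in-noise performance (the QuickSIN test), which could allow the haptic output to be personalized to individual hearing profiles.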
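The three stages can be sketched in Python as below. This is a minimal illustration under stated assumptions, not the HapticHearing implementation: the band edges, the use of PCA as a stand-in for the vowel embedding, and the angle-to-actuator rendering rule in `to_actuator_levels` are all hypothetical choices made for the example.

```python
import numpy as np


def band_log_energies(frame, sample_rate, band_edges_hz):
    """Stage 1 (assumed form): log energy of one audio frame per frequency band."""
    windowed = frame * np.hanning(len(frame))           # taper to reduce spectral leakage
    power = np.abs(np.fft.rfft(windowed)) ** 2           # one-sided power spectrum
    freqs = np.fft.rfftfreq(len(frame), d=1.0 / sample_rate)
    energies = []
    for lo, hi in zip(band_edges_hz[:-1], band_edges_hz[1:]):
        in_band = (freqs >= lo) & (freqs < hi)
        energies.append(np.log10(power[in_band].sum() + 1e-12))  # epsilon avoids log(0)
    return np.array(energies)


def fit_embedding(features, n_dims=2):
    """Stage 2 stand-in (hypothetical): PCA over a (frames x bands) matrix via SVD."""
    mean = features.mean(axis=0)
    _, _, vt = np.linalg.svd(features - mean, full_matrices=False)
    return mean, vt[:n_dims]                             # top principal axes as rows


def embed(features, mean, axes):
    """Project one feature vector into the low-dimensional embedding."""
    return axes @ (features - mean)


def to_actuator_levels(point, n_actuators):
    """Stage 3 (hypothetical rule): direction picks an actuator, distance sets intensity."""
    angle = np.arctan2(point[1], point[0]) % (2 * np.pi)
    index = int(round(angle / (2 * np.pi) * n_actuators)) % n_actuators
    levels = np.zeros(n_actuators)
    levels[index] = min(1.0, float(np.linalg.norm(point)))  # clip to the [0, 1] drive range
    return levels
```

In this sketch, `fit_embedding` would be fit offline on a corpus of vowel frames, while the other three functions would run once per incoming frame.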

The system connects to the wearable devices through a custom USB haptic driver, enabling real-time rendering with minimal latency.
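The protocol of the custom USB haptic driver is not described here. As a hedged illustration of what per-frame rendering over USB could look like, the sketch below packs actuator intensities into a small byte frame with a start byte, an actuator count, one duty-cycle byte per actuator, and a checksum. The frame layout, `START_BYTE`, `encode_frame`, and `decode_frame` are all invented for the example.

```python
START_BYTE = 0xA5  # hypothetical frame delimiter, not the real driver's


def encode_frame(levels):
    """Pack per-actuator intensities in [0, 1] into a hypothetical byte frame.

    Assumed layout: start byte | actuator count | one uint8 duty per actuator | checksum.
    """
    duties = [max(0, min(255, int(round(level * 255)))) for level in levels]
    payload = bytes([START_BYTE, len(duties), *duties])  # raises ValueError past 255 actuators
    checksum = sum(payload) & 0xFF                        # 8-bit additive checksum
    return payload + bytes([checksum])


def decode_frame(frame):
    """Inverse of encode_frame, as device-side firmware might parse it."""
    if len(frame) < 3 or frame[0] != START_BYTE:
        raise ValueError("missing start byte")
    if sum(frame[:-1]) & 0xFF != frame[-1]:
        raise ValueError("checksum mismatch")
    count = frame[1]
    if len(frame) != count + 3:
        raise ValueError("length does not match actuator count")
    return [duty / 255 for duty in frame[2:2 + count]]
```

Keeping each frame to a few bytes per actuator is one way a driver could keep transfer time small relative to the audio frame rate; the actual design may differ.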

Hardware Development

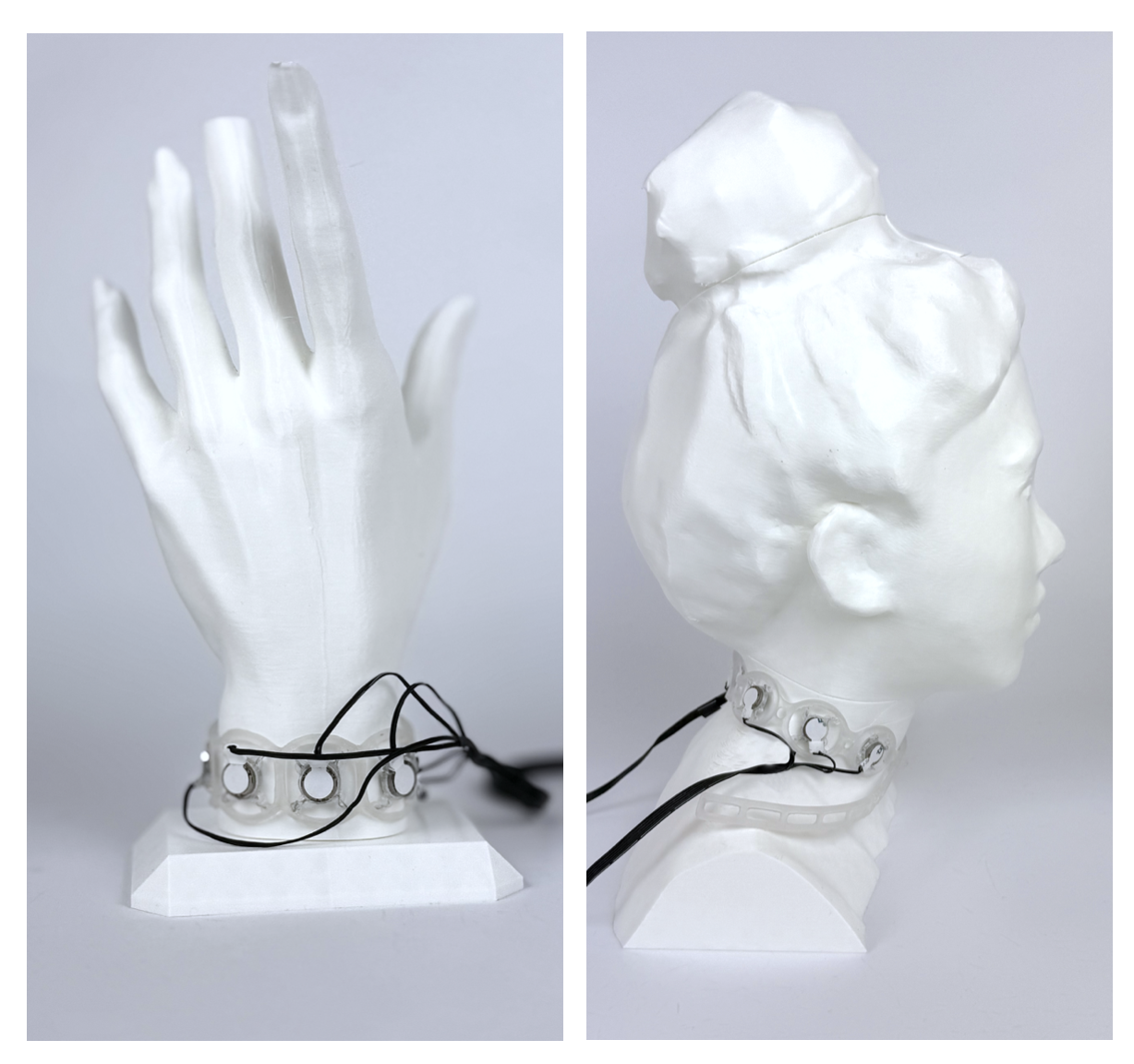

We developed multi-actuator haptic devices in three wearable form factors to explore optimal body locations for speech-related feedback:

- Bracelet (A): Wrist-worn configuration providing spatial patterns across the forearm

- Necklace (B): Collar-mounted design targeting the neck and upper chest area

- Over-ear (C): Temporal bone placement leveraging proximity to the auditory system

Each form factor uses the same underlying electronics and signal processing pipeline, allowing direct comparison of effectiveness across wearing locations. The devices integrate custom PCB designs with multiple vibrotactile actuators arranged to provide spatially distinct tactile cues.
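Because all three form factors share one pipeline, the rendering code can treat a device as little more than a count of actuators along it. The sketch below illustrates that abstraction under an assumed one-dimensional actuator arrangement; `FormFactor`, `render_cue`, and the actuator counts in the loop are hypothetical, since the source does not state how many actuators each device carries.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FormFactor:
    """A wearable device reduced to what the renderer needs (assumed abstraction)."""
    name: str
    n_actuators: int


def render_cue(device, position, intensity):
    """Drive the actuator whose segment contains a normalized position in [0, 1].

    The same function serves every form factor; only n_actuators differs.
    """
    if not 0.0 <= position <= 1.0:
        raise ValueError("position must be in [0, 1]")
    index = min(device.n_actuators - 1, int(position * device.n_actuators))
    levels = [0.0] * device.n_actuators
    levels[index] = intensity
    return levels


# Placeholder actuator counts, for illustration only.
for device in (FormFactor("bracelet", 6), FormFactor("necklace", 8), FormFactor("over-ear", 3)):
    print(device.name, render_cue(device, 0.5, 0.8))
```

Isolating the layout in a single parameter like this is what makes a like-for-like comparison across wearing locations straightforward: the signal path is identical and only the output mapping changes.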

Psychophysical Validation

We conducted a psychophysical study with 9 participants to validate device performance across the three form factors. The evaluation measured two key metrics:

- Vibration Detection Thresholds: Determining the minimum perceptible vibration intensity for each actuator location across device types

- Spatial Localization Accuracy: Assessing participants' ability to identify which actuator is active, including whether they can distinguish adjacent actuators from one another
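The two metrics can be computed as sketched below. The source does not say which psychophysical procedure was used, so `staircase_threshold` substitutes a common one, the transformed 1-up/2-down staircase, which converges on the intensity detected about 70.7% of the time. `localization_scores` assumes actuators are numbered in a line so that "adjacent" means indices differing by one; a ring-shaped layout would need wrap-around. Function names, defaults, and the simulated participant are hypothetical.

```python
def staircase_threshold(detects, start, step, n_reversals=8, max_trials=200):
    """Estimate a detection threshold with a 1-up/2-down staircase.

    detects(level) -> bool is the participant's response at that intensity.
    Two consecutive detections lower the level; a single miss raises it.
    Returns the mean level at the reversals, ignoring the first two.
    """
    level = start
    streak = 0          # consecutive detections at the current level
    direction = None    # +1 while rising, -1 while falling
    reversals = []
    for _ in range(max_trials):
        if detects(level):
            streak += 1
            if streak < 2:
                continue            # need a second detection before stepping down
            streak = 0
            new_direction = -1
        else:
            streak = 0
            new_direction = +1
        if direction is not None and new_direction != direction:
            reversals.append(level)
            if len(reversals) >= n_reversals:
                break
        direction = new_direction
        level = max(0.0, level + new_direction * step)
    if not reversals:
        raise RuntimeError("staircase produced no reversals")
    tail = reversals[2:] if len(reversals) > 2 else reversals  # drop early reversals
    return sum(tail) / len(tail)


def localization_scores(trials):
    """trials: (presented_index, reported_index) pairs.

    Returns (exact-hit rate, adjacent-confusion rate) for a linear layout.
    """
    exact = sum(p == r for p, r in trials) / len(trials)
    adjacent = sum(abs(p - r) == 1 for p, r in trials) / len(trials)
    return exact, adjacent


# Simulated participant (illustration only): detects anything at or above 0.32.
estimate = staircase_threshold(lambda level: level >= 0.32, start=1.0, step=0.05)
```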

Results showed variation in both detection thresholds and localization accuracy across device types, with the bracelet configuration generally showing the highest accuracy. These findings inform optimal actuator placement and signal intensity calibration for speech-related haptic rendering.
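One way to turn measured detection thresholds into per-actuator intensity calibration is to express cue intensity in decibels above each actuator's own threshold, so that a given normalized level is intended to feel more comparable across locations with different sensitivities. The sketch below assumes a 20 dB usable range and linear drive-amplitude units; `calibrated_drive`, `calibrate_frame`, and the mapping itself are hypothetical rather than the system's documented calibration.

```python
def calibrated_drive(level, threshold, max_drive, dynamic_range_db=20.0):
    """Convert a normalized cue level to a drive amplitude for one actuator.

    level <= 0 means off; levels in (0, 1] map from the actuator's detection
    threshold up to dynamic_range_db above it, capped at max_drive.
    """
    if level <= 0.0:
        return 0.0
    level = min(level, 1.0)
    drive = threshold * 10 ** (level * dynamic_range_db / 20.0)  # dB to amplitude ratio
    return min(drive, max_drive)


def calibrate_frame(levels, thresholds, max_drive):
    """Apply per-actuator calibration to one frame of normalized levels."""
    if len(levels) != len(thresholds):
        raise ValueError("need one threshold per actuator")
    return [calibrated_drive(lv, th, max_drive) for lv, th in zip(levels, thresholds)]
```

Working relative to threshold, rather than in raw amplitude, keeps a level of 0 silent and places any nonzero cue at or above the measured threshold at every location.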

Technical Contributions

HapticHearing makes three key contributions to haptic speech augmentation:

- Multi-form-factor hardware platform: Systematic comparison of wearable configurations for speech-related haptic feedback

- Real-time processing pipeline: End-to-end system for translating phonemic content into haptic patterns with minimal latency

- Psychophysical validation: Empirical data on detection thresholds and spatial resolution across body locations

Together, the system and its psychophysical validation suggest that speech information can be rendered effectively through touch, though significant questions remain about optimal encoding strategies and application contexts.

For more information, please read the paper or see the poster.